import matplotlib.pyplot as plt

from scipy.stats import norm, expon, chi2

import numpy as np

from iminuit.cost import ExtendedUnbinnedNLL

from iminuit import Minuit

from iminuit.util import make_with_signature

from iminuit.typing import Annotated, Gt

from sweights.testing import make_classic_toy

from sweights.util import plot_binned

# bounded parameter type for models fitted with iminuit

PositiveFloat = Annotated[float, Gt(0)]The classic sWeights method is a powerful way to project out a component from a mixture of signal and background, but it is often not obvious whether it can be applied, because it requires that signal and background pdfs both factorize in the discriminatory variable \(m\) and the control variable \(t\).

We show two kinds of tests, which can be applied. The first test can always be applied, but is slow to compute. The second test can only be applied under special conditions, but is fast.

Likelihood ratio test

We show that a likelihood ratio test can be used to test the hypothesis that the sWeights are applicable. This technique is applicable to any problem and only requires prerequisites that are anyway needed to compute sWeights.

While the test can only detect a factorization violation with a given confidence (there the usual type I and II errors), it should be safe to apply sWeights if the test passes. When it passes, a potential factorization violation is too small to be detected, which means that applying sWeights should give good results, too.

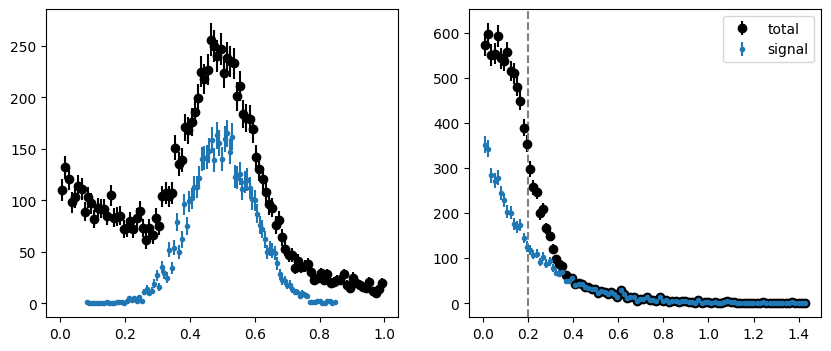

We make a toy dataset to demonstrate the method. We then split the dataset into two subsets along an arbitrary value in the control variable. A good choice is the median, but any value will do. The splitting value is indicated in the plots with a dashed line.

mrange = (0.0, 1.0)

trange = (0.0, 1.5)

tsplit = 0.2

toy = make_classic_toy(1, mrange=mrange, trange=trange)

fig, ax = plt.subplots(1, 2, figsize=(10, 4))

plt.sca(ax[0])

plot_binned(toy[0], bins=100, color="k", label="total")

plot_binned(toy[0][toy[2]], bins=100, marker=".", color="C0", label="signal")

plt.sca(ax[1])

plot_binned(toy[1], bins=100, color="k", label="total")

plot_binned(toy[1][toy[2]], bins=100, marker=".", color="C0", label="signal")

plt.axvline(tsplit, ls="--", color="0.5")

plt.legend();

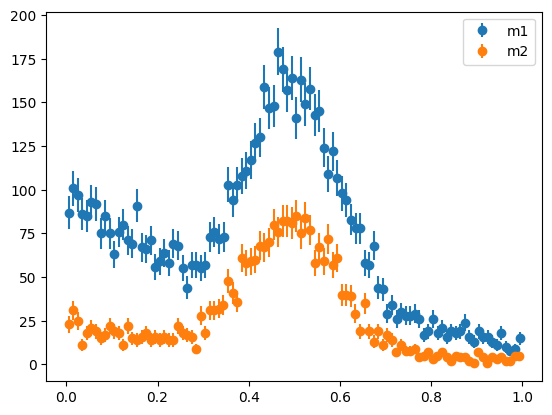

We use a mask to split our toy dataset and plot both parts along the \(m\) variable.

mask = toy[1] < tsplit

m1 = toy[0][mask]

m2 = toy[0][~mask]

plot_binned(m1, bins=100, label="m1")

plot_binned(m2, bins=100, label="m2")

plt.legend();

For sWeights to be applicable, the pdfs in the \(m\) variable must not depend on the \(t\) variable. The respective yields are allowed to vary, but the shape parameters of the pdfs for signal and background must be identical. We can test this null hypothesis against the alternative (shape parameters are not the same) with a likelihood ratio test. This only requires parametric models for the signal and background densities in the \(m\) variable, which are needed anyway to compute sWeights. Hence, this technique is easy to use in practice.

Here, the \(m\) distribution consists of a normal distribution for the signal and exponential background, which have the parameters \(\mu,\sigma\) and \(\lambda\), respectively. In case of the null hypothesis, the two datasets share these parameters, but the signal and background yields \(s,b\) are allowed to differ. In case of the alternative hypothesis, all parameters are determined separately for the two partial datasets. The \(Q\) statistic \[ Q = -2\ln\frac{\sup L_{H_0}}{\sup L_{H_1}} = -2\ln\frac{\sup\{ L_1(s_1, b_1, \mu, \sigma, \lambda) L_2(s_2, b_2, \mu, \sigma, \lambda) \}}{\sup \{ L_1(s_1, b_1, \mu_1, \sigma_1, \lambda_1) L_2(s_2, b_2, \mu_2, \sigma_2, \lambda_2) \}} \] is asymptotically chi-square distributed with 3 degrees of freedom, since that is the difference in dimensionality in the parameter space between \(H_0\) and \(H_1\). Note, that the likelihood ratio test requires \(H_0\) to be nested in \(H_1\), and that is the case here.

We first fit \(H_0\) with iminuit. We use an extended unbinned maximum likelihood method, but one could also use a binned maximum likelihood method or the classic method instead of the extended method. Our choice is merely the most convenient.

For the cost function ExtendedUnbinnedNLL from iminuit, we create model, a function that returns the integral of the density as the first argument and the density as the second argument (see the documentation of ExtendedUnbinnedNLL). We use type annotations to indicate that some parameters have lower bounds.

def model(

x,

s: PositiveFloat,

b: PositiveFloat,

mu: PositiveFloat,

sigma: PositiveFloat,

slope: PositiveFloat,

):

ds = norm(mu, sigma)

snorm = np.diff(ds.cdf(mrange))

db = expon(0, slope)

bnorm = np.diff(db.cdf(mrange))

integral = s + b

density = s / snorm * ds.pdf(x) + b / bnorm * db.pdf(x)

return integral, densityThen we create the ExtendedUnbinnedNLL, one for each partial data set, add them (adding log-likelihoods is equivalent to multiplying the likelihoods) and fit the sum.

iminuit detects the parameters from the function signature. Since the same function model is used in both instances of ExtendedUnbinnedNLL, all parameters would be shared. But we don’t want that to happen for the yields, so we use the utility function make_with_signature to rename the yield parameters of our model, to make them distinct.

nll1 = ExtendedUnbinnedNLL(m1, make_with_signature(model, s="s1", b="b1"))

nll2 = ExtendedUnbinnedNLL(m2, make_with_signature(model, s="s2", b="b2"))

mi0 = Minuit(

nll1 + nll2,

s1=1000,

s2=1000,

b1=1000,

b2=1000,

mu=0.5,

sigma=0.1,

slope=0.5,

)

mi0.strategy = 0 # sufficient since we don't need accurate parameter errors

mi0.migrad()| Migrad | |

|---|---|

| FCN = -1.567e+05 | Nfcn = 184 |

| EDM = 5.35e-06 (Goal: 0.0002) | time = 1.3 sec |

| Valid Minimum | Below EDM threshold (goal x 10) |

| No parameters at limit | Below call limit |

| Hesse ok | Covariance accurate |

| Name | Value | Hesse Error | Minos Error- | Minos Error+ | Limit- | Limit+ | Fixed | |

|---|---|---|---|---|---|---|---|---|

| 0 | s1 | 3.13e3 | 0.08e3 | 0 | ||||

| 1 | b1 | 4.07e3 | 0.09e3 | 0 | ||||

| 2 | mu | 0.4976 | 0.0019 | 0 | ||||

| 3 | sigma | 0.0978 | 0.0018 | 0 | ||||

| 4 | slope | 0.501 | 0.015 | 0 | ||||

| 5 | s2 | 1.81e3 | 0.05e3 | 0 | ||||

| 6 | b2 | 960 | 40 | 0 |

| s1 | b1 | mu | sigma | slope | s2 | b2 | |

|---|---|---|---|---|---|---|---|

| s1 | 7.03e+03 | -4e3 (-0.507) | -4.5308e-3 (-0.029) | 62.5754e-3 (0.403) | -458.83e-3 (-0.358) | 0.6e3 (0.142) | -0.6e3 (-0.165) |

| b1 | -4e3 (-0.507) | 7.52e+03 | 7.6545e-3 (0.048) | -60.1401e-3 (-0.375) | 377.01e-3 (0.284) | -0.6e3 (-0.129) | 0.5e3 (0.142) |

| mu | -4.5308e-3 (-0.029) | 7.6545e-3 (0.048) | 3.45e-06 | -0.4e-6 (-0.106) | -3.3e-6 (-0.117) | -5.8080e-3 (-0.061) | 6.5633e-3 (0.083) |

| sigma | 62.5754e-3 (0.403) | -60.1401e-3 (-0.375) | -0.4e-6 (-0.106) | 3.42e-06 | -8.1e-6 (-0.287) | 25.8622e-3 (0.273) | -25.9445e-3 (-0.330) |

| slope | -458.83e-3 (-0.358) | 377.01e-3 (0.284) | -3.3e-6 (-0.117) | -8.1e-6 (-0.287) | 0.000234 | -137.73e-3 (-0.176) | 125.24e-3 (0.192) |

| s2 | 0.6e3 (0.142) | -0.6e3 (-0.129) | -5.8080e-3 (-0.061) | 25.8622e-3 (0.273) | -137.73e-3 (-0.176) | 2.62e+03 | -0.9e3 (-0.406) |

| b2 | -0.6e3 (-0.165) | 0.5e3 (0.142) | 6.5633e-3 (0.083) | -25.9445e-3 (-0.330) | 125.24e-3 (0.192) | -0.9e3 (-0.406) | 1.81e+03 |

To fit \(H_1\) with iminuit, we could follow the same strategy, but there is a better way. Since none of the parameters are shared now, it is equivalent to optimize the log-likelihood for each dataset separately and add the results. We avoid optimizing a combined likelihood and renaming model parameters.

nll = ExtendedUnbinnedNLL(m1, model)

mi1 = Minuit(nll, s=1000, b=1000, mu=0.5, sigma=0.1, slope=0.5)

mi1.strategy = 0

mi1.migrad()| Migrad | |

|---|---|

| FCN = -1.163e+05 | Nfcn = 132 |

| EDM = 3.78e-06 (Goal: 0.0002) | time = 0.3 sec |

| Valid Minimum | Below EDM threshold (goal x 10) |

| No parameters at limit | Below call limit |

| Hesse ok | Covariance accurate |

| Name | Value | Hesse Error | Minos Error- | Minos Error+ | Limit- | Limit+ | Fixed | |

|---|---|---|---|---|---|---|---|---|

| 0 | s | 3.10e3 | 0.09e3 | 0 | ||||

| 1 | b | 4.09e3 | 0.09e3 | 0 | ||||

| 2 | mu | 0.5014 | 0.0026 | 0 | ||||

| 3 | sigma | 0.0967 | 0.0025 | 0 | ||||

| 4 | slope | 0.500 | 0.018 | 0 |

| s | b | mu | sigma | slope | |

|---|---|---|---|---|---|

| s | 7.97e+03 | -5e3 (-0.561) | -11.426e-3 (-0.050) | 110.353e-3 (0.487) | -638.31e-3 (-0.386) |

| b | -5e3 (-0.561) | 8.26e+03 | 6.357e-3 (0.027) | -113.163e-3 (-0.490) | 596.99e-3 (0.355) |

| mu | -11.426e-3 (-0.050) | 6.357e-3 (0.027) | 6.54e-06 | -0e-6 (-0.070) | -9e-6 (-0.191) |

| sigma | 110.353e-3 (0.487) | -113.163e-3 (-0.490) | -0e-6 (-0.070) | 6.45e-06 | -14e-6 (-0.295) |

| slope | -638.31e-3 (-0.386) | 596.99e-3 (0.355) | -9e-6 (-0.191) | -14e-6 (-0.295) | 0.000343 |

We can safely ignore the remark that the covariance matrix is approximate.

nll = ExtendedUnbinnedNLL(m2, model)

mi2 = Minuit(nll, s=1000, b=1000, mu=0.5, sigma=0.1, slope=0.5)

mi2.strategy = 0

mi2.migrad()| Migrad | |

|---|---|

| FCN = -4.043e+04 | Nfcn = 102 |

| EDM = 5.79e-05 (Goal: 0.0002) | time = 0.2 sec |

| Valid Minimum | Below EDM threshold (goal x 10) |

| No parameters at limit | Below call limit |

| Hesse ok | Covariance accurate |

| Name | Value | Hesse Error | Minos Error- | Minos Error+ | Limit- | Limit+ | Fixed | |

|---|---|---|---|---|---|---|---|---|

| 0 | s | 1.83e3 | 0.06e3 | 0 | ||||

| 1 | b | 940 | 50 | 0 | ||||

| 2 | mu | 0.4928 | 0.0032 | 0 | ||||

| 3 | sigma | 0.0990 | 0.0028 | 0 | ||||

| 4 | slope | 0.50 | 0.04 | 0 |

| s | b | mu | sigma | slope | |

|---|---|---|---|---|---|

| s | 3.19e+03 | -1.4e3 (-0.498) | 3.115e-3 (0.018) | 61.094e-3 (0.392) | -0.7619 (-0.340) |

| b | -1.4e3 (-0.498) | 2.31e+03 | -970e-6 (-0.006) | -61.182e-3 (-0.462) | 0.7594 (0.399) |

| mu | 3.115e-3 (0.018) | -970e-6 (-0.006) | 9.93e-06 | -1e-6 (-0.058) | -29e-6 (-0.233) |

| sigma | 61.094e-3 (0.392) | -61.182e-3 (-0.462) | -1e-6 (-0.058) | 7.61e-06 | -31e-6 (-0.285) |

| slope | -0.7619 (-0.340) | 0.7594 (0.399) | -29e-6 (-0.233) | -31e-6 (-0.285) | 0.00157 |

The class ExtendedUnbinnedNLL computes the equivalent of \(-2 \ln L\). Thus, to compute the \(Q\) statistic, we therefore can add the minimum values of the two cost functions for \(H_1\) and subtract them from \(H_0\). The degrees of freedom are obtained by subtracting the number parameters for \(H_0\) from \(H_1\).

# difference in number of parameters for H1 and H0

ndof = (mi1.nfit + mi2.nfit) - mi0.nfit

# test statistic, which is asymptotically chi-square distributed

q = mi0.fval - (mi1.fval + mi2.fval)

pvalue = chi2(ndof).sf(q)

pvalue0.16428688931432733With a chance probability of 26 % we won’t reject the null.

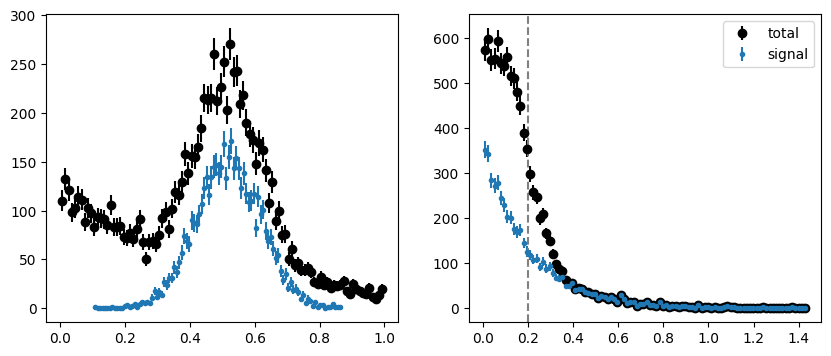

Let’s try this again for a toy dataset where the factorization requirement is not fulfilled. We artificially make the mean of the signal PDF in \(m\) a function of the \(t\) variable. This breaks factorization, because the parameter \(\mu\) is now a function of \(t\).

# save the original toy for later

toy_original = (toy[0].copy(), toy[1].copy(), toy[2].copy())

# modify the toy to introduce non-factorization

tm = toy[2]

toy[0][tm] += 0.1 * toy[1][tm]

fig, ax = plt.subplots(1, 2, figsize=(10, 4))

plt.sca(ax[0])

plot_binned(toy[0], bins=100, color="k", label="total")

plot_binned(toy[0][toy[2]], bins=100, marker=".", color="C0", label="signal")

plt.sca(ax[1])

plot_binned(toy[1], bins=100, color="k", label="total")

plot_binned(toy[1][toy[2]], bins=100, marker=".", color="C0", label="signal")

plt.axvline(tsplit, ls="--", color="0.5")

plt.legend();

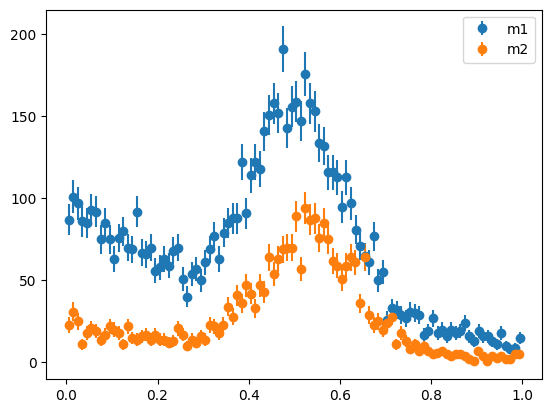

This change is hardly noticeable when we plot the full dataset. It becomes more apparent when we plot the distributions after splitting (we split in the same way as before).

mask = toy[1] < tsplit

m1 = toy[0][mask]

m2 = toy[0][~mask]

plot_binned(m1, bins=100, label="m1")

plot_binned(m2, bins=100, label="m2")

plt.legend();

We can see now that the peaks are not at the same place, but the deviation could be more subtle. A change in the width of the peak or the slope of the background would be harder to detect by eye. Or there could be several changes in the shape variables at once, each individually too small to be noticeable.

In any case, we need a p-value to quantify whether such a deviation is significant, which is provided by the likelihood-ratio test.

nll1 = ExtendedUnbinnedNLL(m1, make_with_signature(model, s="s1", b="b1"))

nll2 = ExtendedUnbinnedNLL(m2, make_with_signature(model, s="s2", b="b2"))

mi0 = Minuit(

nll1 + nll2,

s1=1000,

s2=1000,

b1=1000,

b2=1000,

mu=0.5,

sigma=0.1,

slope=0.5,

)

mi0.strategy = 0 # sufficient since we don't need accurate parameter errors

mi0.migrad()| Migrad | |

|---|---|

| FCN = -1.565e+05 | Nfcn = 201 |

| EDM = 7.62e-07 (Goal: 0.0002) | time = 0.8 sec |

| Valid Minimum | Below EDM threshold (goal x 10) |

| No parameters at limit | Below call limit |

| Hesse ok | Covariance accurate |

| Name | Value | Hesse Error | Minos Error- | Minos Error+ | Limit- | Limit+ | Fixed | |

|---|---|---|---|---|---|---|---|---|

| 0 | s1 | 3.14e3 | 0.08e3 | 0 | ||||

| 1 | b1 | 4.06e3 | 0.09e3 | 0 | ||||

| 2 | mu | 0.5199 | 0.0020 | 0 | ||||

| 3 | sigma | 0.1002 | 0.0019 | 0 | ||||

| 4 | slope | 0.489 | 0.016 | 0 | ||||

| 5 | s2 | 1.84e3 | 0.05e3 | 0 | ||||

| 6 | b2 | 930 | 40 | 0 |

| s1 | b1 | mu | sigma | slope | s2 | b2 | |

|---|---|---|---|---|---|---|---|

| s1 | 7.11e+03 | -4e3 (-0.548) | -14.102e-3 (-0.086) | 69.984e-3 (0.427) | -520.18e-3 (-0.381) | 0.7e3 (0.152) | -0.7e3 (-0.196) |

| b1 | -4e3 (-0.548) | 7.48e+03 | 12.494e-3 (0.074) | -70.781e-3 (-0.421) | 529.73e-3 (0.378) | -0.6e3 (-0.144) | 0.6e3 (0.177) |

| mu | -14.102e-3 (-0.086) | 12.494e-3 (0.074) | 3.82e-06 | -0e-6 (-0.074) | -4e-6 (-0.138) | -1.380e-3 (-0.014) | 789e-6 (0.010) |

| sigma | 69.984e-3 (0.427) | -70.781e-3 (-0.421) | -0e-6 (-0.074) | 3.78e-06 | -12e-6 (-0.371) | 29.984e-3 (0.301) | -28.380e-3 (-0.350) |

| slope | -520.18e-3 (-0.381) | 529.73e-3 (0.378) | -4e-6 (-0.138) | -12e-6 (-0.371) | 0.000262 | -163.01e-3 (-0.197) | 204.76e-3 (0.304) |

| s2 | 0.7e3 (0.152) | -0.6e3 (-0.144) | -1.380e-3 (-0.014) | 29.984e-3 (0.301) | -163.01e-3 (-0.197) | 2.63e+03 | -0.9e3 (-0.416) |

| b2 | -0.7e3 (-0.196) | 0.6e3 (0.177) | 789e-6 (0.010) | -28.380e-3 (-0.350) | 204.76e-3 (0.304) | -0.9e3 (-0.416) | 1.74e+03 |

nll = ExtendedUnbinnedNLL(m1, model)

mi1 = Minuit(nll, s=1000, b=1000, mu=0.5, sigma=0.1, slope=0.5)

mi1.strategy = 0

mi1.migrad()| Migrad | |

|---|---|

| FCN = -1.163e+05 | Nfcn = 118 |

| EDM = 7.28e-07 (Goal: 0.0002) | time = 0.3 sec |

| Valid Minimum | Below EDM threshold (goal x 10) |

| No parameters at limit | Below call limit |

| Hesse ok | Covariance accurate |

| Name | Value | Hesse Error | Minos Error- | Minos Error+ | Limit- | Limit+ | Fixed | |

|---|---|---|---|---|---|---|---|---|

| 0 | s | 3.12e3 | 0.09e3 | 0 | ||||

| 1 | b | 4.08e3 | 0.09e3 | 0 | ||||

| 2 | mu | 0.5095 | 0.0026 | 0 | ||||

| 3 | sigma | 0.0973 | 0.0026 | 0 | ||||

| 4 | slope | 0.499 | 0.019 | 0 |

| s | b | mu | sigma | slope | |

|---|---|---|---|---|---|

| s | 7.94e+03 | -4e3 (-0.547) | -16.057e-3 (-0.070) | 107.648e-3 (0.460) | -621.59e-3 (-0.374) |

| b | -4e3 (-0.547) | 8.41e+03 | 14.143e-3 (0.060) | -113.809e-3 (-0.473) | 675.24e-3 (0.395) |

| mu | -16.057e-3 (-0.070) | 14.143e-3 (0.060) | 6.64e-06 | -0e-6 (-0.061) | -9e-6 (-0.197) |

| sigma | 107.648e-3 (0.460) | -113.809e-3 (-0.473) | -0e-6 (-0.061) | 6.89e-06 | -16e-6 (-0.325) |

| slope | -621.59e-3 (-0.374) | 675.24e-3 (0.395) | -9e-6 (-0.197) | -16e-6 (-0.325) | 0.000347 |

nll = ExtendedUnbinnedNLL(m2, model)

mi2 = Minuit(nll, s=1000, b=1000, mu=0.5, sigma=0.1, slope=0.5)

mi2.strategy = 0

mi2.migrad()| Migrad | |

|---|---|

| FCN = -4.029e+04 | Nfcn = 103 |

| EDM = 0.000123 (Goal: 0.0002) | time = 0.2 sec |

| Valid Minimum | Below EDM threshold (goal x 10) |

| No parameters at limit | Below call limit |

| Hesse ok | Covariance accurate |

| Name | Value | Hesse Error | Minos Error- | Minos Error+ | Limit- | Limit+ | Fixed | |

|---|---|---|---|---|---|---|---|---|

| 0 | s | 1.83e3 | 0.06e3 | 0 | ||||

| 1 | b | 950 | 50 | 0 | ||||

| 2 | mu | 0.5331 | 0.0030 | 0 | ||||

| 3 | sigma | 0.1008 | 0.0028 | 0 | ||||

| 4 | slope | 0.50 | 0.04 | 0 |

| s | b | mu | sigma | slope | |

|---|---|---|---|---|---|

| s | 3.39e+03 | -1.5e3 (-0.528) | -4.411e-3 (-0.025) | 60.772e-3 (0.371) | -1.1197 (-0.437) |

| b | -1.5e3 (-0.528) | 2.48e+03 | 6.554e-3 (0.043) | -62.618e-3 (-0.448) | 1.1090 (0.507) |

| mu | -4.411e-3 (-0.025) | 6.554e-3 (0.043) | 9.19e-06 | -1e-6 (-0.112) | -18e-6 (-0.137) |

| sigma | 60.772e-3 (0.371) | -62.618e-3 (-0.448) | -1e-6 (-0.112) | 7.89e-06 | -39e-6 (-0.319) |

| slope | -1.1197 (-0.437) | 1.1090 (0.507) | -18e-6 (-0.137) | -39e-6 (-0.319) | 0.00193 |

# difference in number of parameters for H1 and H0

ndof = (mi1.nfit + mi2.nfit) - mi0.nfit

# test statistic, which is asymptotically chi-square distributed

q = mi0.fval - (mi1.fval + mi2.fval)

pvalue = chi2(ndof).sf(q)

pvalue1.253769135376934e-08norm.isf(pvalue)5.572746842381205Now the p-value is \(1.7\times 10^{-9}\), equivalent to a \(5.9\,\sigma\) deviation from a normal distribution. In this case, we should reject the null hypothesis.

Alternative: Kendall’s tau test

The Kendall’s tau test is a simple test you can apply if you have pure samples from the signal and background components available. In general, you won’t have these, because if you did, you would not need sWeights in the first place.

A pure background sample can be obtained from data, by selecting samples from the side-bands around the signal peak. For the signal, you can usually only get it from Monte-Carlo simulation of the experiment. You need to trust the simulation then.

The Kendall’s tau test checks whether two variables are independent and returns a p-value for that null hypothesis. You should apply this test to each component. If the p-values for a component is very small, you cannot compute classic sWeights, although you can still use COWs after expanding the component into many sub-components. To apply the classic sWeights, all components must pass the test.

We first apply the test to the signal and background samples in the original toy, where these components factorize, to see that it passes.

from sweights.independence import kendall_tau

m, t, truth_mask = toy_original

m_sig = m[truth_mask]

t_sig = t[truth_mask]

val, err, pvalue = kendall_tau(m_sig, t_sig)

pvalue0.09029195168931155m_bkg = m[~truth_mask]

t_bkg = t[~truth_mask]

val, err, pvalue = kendall_tau(m_bkg, t_bkg)

pvalue0.7015075243963227Both components pass the test, neither p-value is very small (\(\ll 0.01\)).

Warning: As already said, you cannot apply the test to the mixed sample. The mixed sample does not factorize, even if the components separately factorize.

val, err, pvalue = kendall_tau(m, t)

pvalue0.00588671692380863Now we apply the test to the modified toy which violates the factorization requirement.

m, t, truth_mask = toy

m_sig = m[truth_mask]

t_sig = t[truth_mask]

val, err, pvalue = kendall_tau(m_sig, t_sig)

pvalue1.9412926161716674e-24m_bkg = m[~truth_mask]

t_bkg = t[~truth_mask]

val, err, pvalue = kendall_tau(m_bkg, t_bkg)

pvalue0.7015075243963227We find that the signal component does not pass the factorization test, but the background does. That is exactly what we expect, since we only modified the signal component.

As mentioned, a pure background sample can also be obtained by selecting events from the side bands without knowing true labels. We see that a sample from the side band passes our test, even though the signal region would fail it.

ma = (m < 0.2) | (m > 0.8)

m_bkg = m[ma]

t_bkg = t[ma]

val, err, pvalue = kendall_tau(m_bkg, t_bkg)

pvalue0.3855929053284022